Some 18 months after the launch of ChatGPT, the first human-sounding large language model, hype over the potential of AI has only accelerated, even though it’s far from certain that it’s yet delivering on its considerable promise.

The rapid improvement of generative AI (GenAI) – and not only in generating realistic video of people eating noodles – makes it easy to be dazzled by the marketing, only to be disappointed when putting various types of AI to work on more everyday matters.

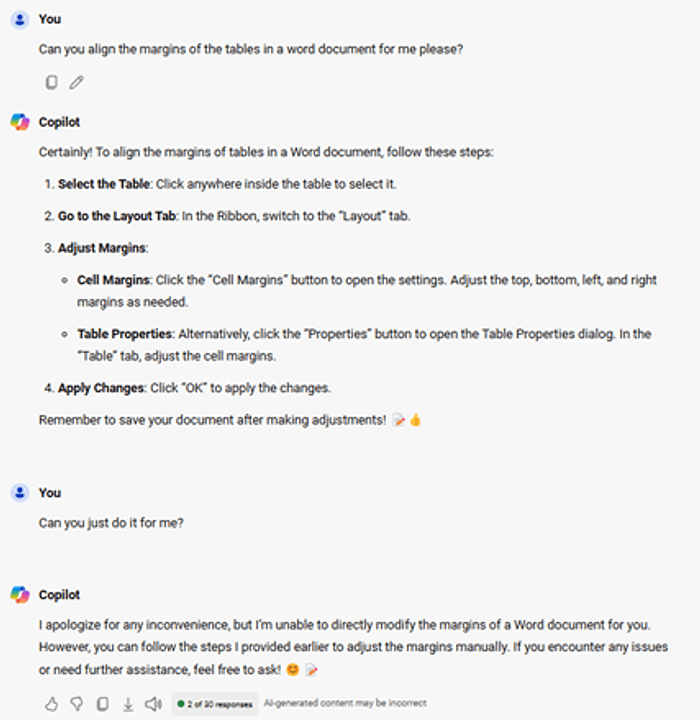

Consider the promise of LLM-powered AI assistants. I had the following exchange with Microsoft’s “Copilot”, after struggling with the simple task of aligning some table margins in a Word document and deciding to test it out. Bear in mind I’d spent half an hour trying to drag tables around, almost getting things aligned only to have the edges jump to an invisible grid that was misaligned with the edges of every other table in the document.

Also keep in mind Copilot, a Microsoft AI that Microsoft kept bugging me with annoying ads to try, was embedded in the Microsoft Windows OS, and I was working on a Microsoft Word document.

Frustration, thy name is Microsoft Office.

It’s tempting to write off the whole concept of an “AI assistant”, if not AI in general (the “I” often seems a massive misnomer, as others have pointed out) after an exchange like this. But that would be to fall into the same trap as being wowed by a few impressive videos.

Marketing sees the promise, not the returns (yet)

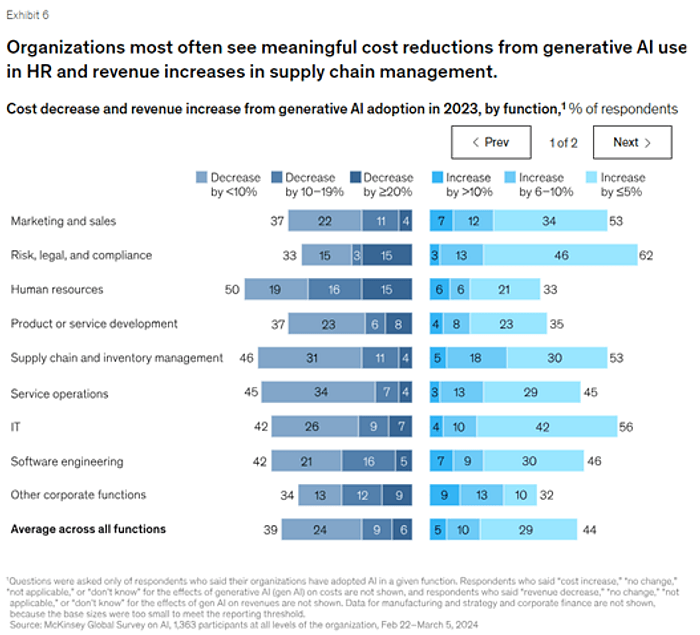

I wondered whether other people who’d applied AI at work had the same frustrations. Certainly, many more have been trying it. McKinsey research shows AI adoption has rocketed in recent months: 65% of firms are now using GenAI, nearly double the proportion that were doing so just 10 months earlier. By function, marketing is the clear leader, with more than one-third of respondents saying their marketing teams are using GenAI.

Interestingly, though, marketing is not the function getting the most returns from its deployment. McKinsey’s study shows that HR and supply chain management are seeing the best returns in terms of cost reductions and revenue increases respectively. These results suggest that a load of marketing execs (63% of those who’ve adopted it, perhaps) are likely to be shouting in frustration at GenAI’s cheerful obduracy.

I canvassed some of our clients – the marketing teams of major financial and professional services firms – to check whether this rang true, and to ask where GenAI was adding value or falling short. Most reported that it’s a great tool to help generate ideas, or crunch performance data, but so far can’t be relied on to produce audience-ready content. (Or, presumably, fix tables in MS Word.)

This dichotomy is not lost on the thought leaders at McKinsey, who point out the difficulty of moving from fancy-looking pilots to deployment at scale: just 11% of companies have done so, apparently.

Educate thyself

These decidedly mixed results show it clearly pays to take a measured approach to the application of AI either as a cost saver or revenue driver.

With this in mind, I’ve taken the first executive education course I’ve done for a while – Kellogg’s AI Applications for Growth. Not to blow our own trumpet too hard, but team N/N has also been “learning by doing”, having built our own AI-driven thematic analysis service in collaboration with an AI service provider. (In McKinsey’s rubric, that makes us “shapers”, as opposed to mere “takers” or “makers”.)

Even after getting some hands-on experience, I reasoned that a more solid academic approach to understanding how AI can drive business growth would still prove useful.

Turns out I wasn’t wrong. Only six weeks in, I’ve already learned some invaluable tools and techniques to evaluate the applicability of AI (generative and otherwise) in marketing and beyond in a more systematic and rigorous fashion.

For instance, to choose from among the millions of potential applications of AI, it’s helpful to score dimensions of data richness within your company across specific areas (customers, operations, administration and risk, for example). Visualising this in a “data radar” chart can help show clearly where you would be best advised to focus your efforts.
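
For the more technically minded, the scoring step can be sketched in a few lines of Python. This is only a rough illustration, not the course's own method: the four areas come from the examples above, but the sub-dimensions (`volume`, `quality`, `accessibility`), the 1-5 scale and every number are assumptions made up for the example.

```python
import math

# Hypothetical 1-5 data-richness scores. The areas are those named in the
# text; the sub-dimensions and values are invented for illustration only.
scores = {
    "customers": {"volume": 4, "quality": 3, "accessibility": 4},
    "operations": {"volume": 3, "quality": 2, "accessibility": 2},
    "administration": {"volume": 2, "quality": 3, "accessibility": 3},
    "risk": {"volume": 5, "quality": 4, "accessibility": 3},
}


def area_averages(scores):
    """Average the sub-dimension scores for each area."""
    return {area: sum(dims.values()) / len(dims) for area, dims in scores.items()}


def radar_points(averages):
    """Return (area, x, y) vertices of a radar polygon, one axis per area."""
    n = len(averages)
    points = []
    for i, (area, value) in enumerate(averages.items()):
        angle = 2 * math.pi * i / n  # spread the axes evenly around the circle
        points.append((area, value * math.cos(angle), value * math.sin(angle)))
    return points


averages = area_averages(scores)
best_area = max(averages, key=averages.get)  # area with the richest data
points = radar_points(averages)

for area, avg in averages.items():
    print(f"{area}: {avg:.2f}")
print("Focus first on:", best_area)
```

The `points` list holds the coordinates a charting tool would need to draw the radar polygon. In practice the scores would come from a structured review of your own data, not from guesses like these.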

Then, when you’ve narrowed down a functional area, preparing a “canvas” – a type of structured planning document or visual template – can help make sure you’ve identified the specific jobs AI can do, the type of AI that would best perform those functions, and where it might save the most time or cost.

Further down the road, the evaluation process might involve rapidly iterating proofs-of-concept using an open-source toolkit like KNIME that can help model the data flows and analysis steps necessary for each task, to ensure you can generate valuable information or the desired outcome.

Even if, like me, you don’t have the skills or inclination to become a master of data science, getting a little insight into the concerns and practices of data analysts will help you evaluate the power of AI more realistically.

The “black box” nature of LLMs and GenAI, as opposed to machine learning, deep learning and earlier iterations of AI, makes the questions you need to ask a little different, but the process of systematically figuring out what needs doing and whether AI can help is equally crucial.

The course and my research have also taught me there are many things that AI can’t do, yet. The exciting thing is that it might soon be able to – hopefully including fixing margins. And as it acquires more capabilities, the companies that have established rigorous evaluation tools and practices will be best placed to judge its real impact, and invest in it (or not) for the right reasons.
